Selectively Exposing CCE Workloads with a Dedicated Gateway

You can use APIG to selectively expose your workloads and microservices in Cloud Container Engine (CCE).

Expose CCE workloads using either of the following methods. Method 1 is recommended.

- Method 1: Create a load balance channel on APIG to access the pod IP addresses of CCE workloads. The channel dynamically monitors changes to these addresses. When opening the APIs of a containerized application, specify the load balance channel to access the backend service.

- Method 2: Import a CCE workload to APIG. APIs and a load balance channel are generated and associated with each other, and the channel dynamically monitors pod IP address changes. Expose workloads and microservices in CCE using these APIs.

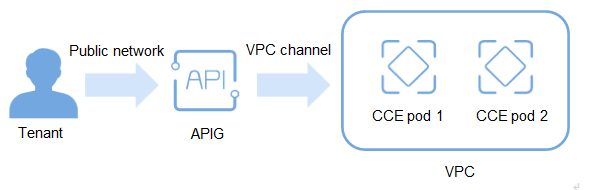

Solution Design

Figure 1 Accessing CCE workloads (composed of pods) through APIG

- You do not need to set elastic IP addresses, reducing network bandwidth costs. Workload addresses in CCE can be accessed through a load balance channel that is manually created or generated by importing a workload.

- Workload pod addresses in CCE are dynamically monitored and automatically updated by a load balance channel that is manually created or generated by importing a workload.

- CCE workloads can be released by tag for testing and version switching.

- Multiple authentication modes keep access secure.

- Request throttling policies protect your backend service. Because requests pass through APIG instead of reaching containerized applications directly, throttling keeps your backend service running stably.

- Pod load balancing improves resource utilization and system reliability.

- Only CCE Turbo clusters and CCE clusters using the VPC network model are supported.

- The CCE cluster and your gateway must be in the same VPC or otherwise have network connectivity to each other.

- If you select a CCE cluster that uses the VPC network model, add the container CIDR block of the cluster in the Routes area of the gateway details page.
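The route requirement above can be sanity-checked offline. The following is a minimal sketch, assuming a hypothetical container CIDR block and hypothetical pod IP addresses; it only checks whether an address falls inside the blocks you added to the Routes area and does not call any APIG or CCE API.

```python
import ipaddress


def pod_ip_routable(pod_ip, routed_cidrs):
    """Return True if pod_ip falls inside any CIDR block listed in the gateway routes."""
    ip = ipaddress.ip_address(pod_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in routed_cidrs)


# Hypothetical values for illustration only; use the container CIDR block
# recorded for your own cluster.
container_cidr = "172.16.0.0/16"

print(pod_ip_routable("172.16.3.25", [container_cidr]))  # inside the block
print(pod_ip_routable("10.0.0.8", [container_cidr]))     # outside the block
```

If a pod IP address is reported as outside every routed block, the gateway has no route to that pod until the matching container CIDR block is added.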

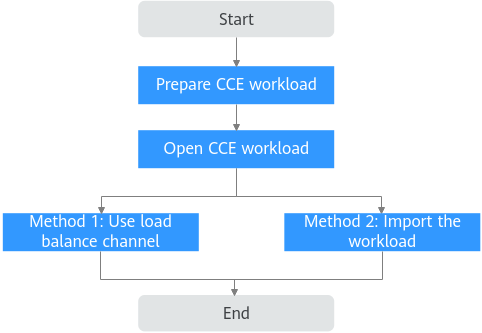

General Procedure

- Prepare a CCE workload. Before opening a container workload with APIG, create a CCE cluster that uses the VPC network model, or a CCE Turbo cluster, on the CCE console.

- Open the CCE workload, using either Method 1 or Method 2.

- (Optional) Configure workload labels for grayscale release. Grayscale release is a service release policy that gradually switches traffic from an early version to a later version by specifying the traffic distribution weight.

Implementation

Preparing a CCE Workload

- Create a cluster.

- Log in to the CCE console and buy a CCE standard or CCE Turbo cluster on the Clusters page. In this example, select CCE Standard Cluster and set Network Model to VPC network.

- After the cluster is created, record the container CIDR block.

- Add this CIDR block in the Routes area of a dedicated gateway.

- Log in to the APIG console, and choose Gateways in the navigation pane.

- Click the gateway name to go to the details page.

- Add the container CIDR block in the Routes area.

- Create a workload.

- Open a terminal and save the following two manifests as version1.yaml and version2.yaml. Each manifest defines a Deployment and a Service:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment-v1
      labels:
        app: deployment-demo
        version: v1
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: deployment-demo
          version: v1
      template:
        metadata:
          labels:
            app: deployment-demo
            version: v1
        spec:
          containers:
            - name: whoami
              image: traefik/whoami
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: whoami-service-v1
    spec:
      selector:
        app: deployment-demo
        version: v1
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

and

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whoami-deployment-v2
      labels:
        app: deployment-demo
        version: v2
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: deployment-demo
          version: v2
      template:
        metadata:
          labels:
            app: deployment-demo
            version: v2
        spec:
          containers:
            - name: whoami
              image: traefik/whoami
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: whoami-service-v2
    spec:
      selector:
        app: deployment-demo
        version: v2
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

- Apply both manifests with kubectl:

    kubectl apply -f version1.yaml
    kubectl apply -f version2.yaml
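Both Deployments share the label app=deployment-demo and differ only in the version label, which is what later lets a load balance channel select all pods by app and a server group narrow the selection by version. The sketch below illustrates that equality-based label matching in plain Python. The pod names are hypothetical, and the matching function is a simplified model, not the code that Kubernetes or APIG runs.

```python
def matches(selector, labels):
    """Return True if every key/value pair in selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical pod names; the labels mirror the two Deployments above.
pods = {
    "whoami-v1-pod-a": {"app": "deployment-demo", "version": "v1"},
    "whoami-v1-pod-b": {"app": "deployment-demo", "version": "v1"},
    "whoami-v2-pod-a": {"app": "deployment-demo", "version": "v2"},
    "whoami-v2-pod-b": {"app": "deployment-demo", "version": "v2"},
}


def select(selector):
    """Return the sorted names of all pods whose labels satisfy selector."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


# Selecting by app alone covers both versions.
print(select({"app": "deployment-demo"}))
# Adding the version label narrows the selection to one Deployment.
print(select({"app": "deployment-demo", "version": "v1"}))
```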

Method 1: Opening a CCE Workload by Creating a Load Balance Channel

- Create a load balance channel.

- Go to the APIG console, and choose Gateways in the navigation pane.

- Choose API Management -> API Policies.

- On the Load Balance Channels tab, click Create Load Balance Channel.

- Set the basic information.

Table 1 Basic information parameters

| Parameter | Description |
|---|---|
| Name | Enter a name that conforms to specific rules to facilitate search. In this example, enter VPC_demo. |
| Port | Container port on which the workload serves requests. Set this parameter to 80, the default HTTP port. |
| Routing Algorithm | Select WRR (weighted round robin). This algorithm forwards requests to each of the cloud servers you select according to server weight. |
| Type | Select Microservice. |

- Configure microservice information.

Table 2 Microservice configuration

| Parameter | Description |
|---|---|
| Microservice Type | Cloud Container Engine (CCE) is always selected. |
| Cluster | Select the created cluster. |
| Namespace | Select a namespace in the cluster. In this example, select default. |
| Workload Type | Select Deployment. This parameter must be the same as the type of the created workload. |
| Service Label Key | Select the pod label app of the created workload. |
| Service Label Value | Select the label value deployment-demo. |

-

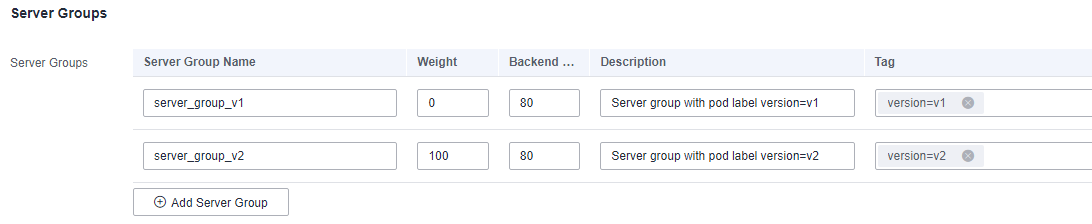

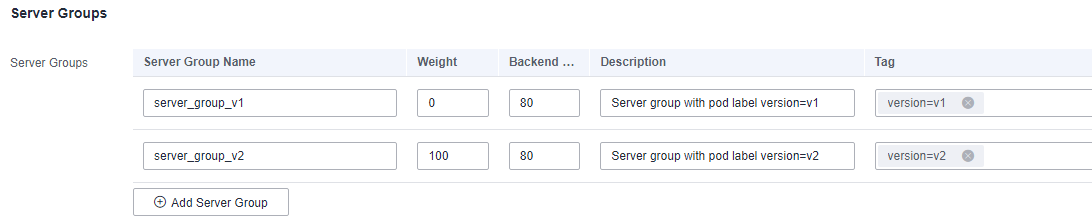

Configure a server group.

Table 3 Server group configuration

| Parameter | Description |

|---|---|

| Server Group Name | Enter server_group_v1 . |

| Weight | Enter 1 . |

| Backend Service Port | Enter 80 . This must be the same as the container port in the workload. |

| Description | Enter "Server group with version v1". |

| Tag | Select the pod label version=v1 of the created workload. |

- Configure health check.

Table 4 Health check configuration

| Parameter | Description |
|---|---|
| Protocol | Default: TCP. |
| Check Port | Backend server port in the channel. |
| Healthy Threshold | Default: 2. This is the number of consecutive successful checks required for a cloud server to be considered healthy. |
| Unhealthy Threshold | Default: 5. This is the number of consecutive failed checks required for a cloud server to be considered unhealthy. |
| Timeout (s) | Default: 5. This is the timeout used to determine whether a health check has failed. |
| Interval (s) | Default: 10. This is the interval between consecutive checks. |

- Click Finish.

In the load balance channel list, click a channel name to view details.
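The healthy and unhealthy thresholds in Table 4 describe a simple counter-based state change. The sketch below models that behavior with the default values 2 and 5. It is an illustration only: the class name is hypothetical, and starting in the healthy state is an assumption, since the initial state is not specified here.

```python
class HealthTracker:
    """Tracks one backend's health from consecutive check results."""

    def __init__(self, healthy_threshold=2, unhealthy_threshold=5, healthy=True):
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy = healthy  # assumed initial state
        self.successes = 0      # consecutive successful checks
        self.failures = 0       # consecutive failed checks

    def record(self, check_passed):
        """Record one check result and return the resulting health state."""
        if check_passed:
            self.successes += 1
            self.failures = 0
            if not self.healthy and self.successes >= self.healthy_threshold:
                self.healthy = True
        else:
            self.failures += 1
            self.successes = 0
            if self.healthy and self.failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy


tracker = HealthTracker()
# Five failed checks followed by two successful ones.
states = [tracker.record(result) for result in [False] * 5 + [True] * 2]
print(states)  # [True, True, True, True, False, False, True]
```

With the default interval of 10 seconds, this model takes five consecutive failed checks to mark a backend unhealthy and two consecutive successful checks to restore it.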

- Open an API.

- Create an API group.

- Choose API Management -> API Groups.

- Click Create API Group, and choose Create Directly.

- Configure group information and click OK.

- Create an API and bind the preceding load balance channel to it.

- Click the group name to go to the details page. On the APIs tab, choose Create API -> Create API.

- Configure frontend information and click Next.


Table 5 Frontend configuration

| Parameter | Description |
|---|---|
| Group | Select the preceding API group. |
| URL | Method: Request method of the API. Set this parameter to ANY. |
| Protocol | Request protocol of the API. Set this parameter to HTTPS. |
| Subdomain Name | The system automatically allocates a subdomain name to each API group for internal testing. The subdomain name can be accessed 1000 times a day. |
| Path | Path for requesting the API. |
| Gateway Response | Select a response to be displayed if the gateway fails to process an API request. Default: default. |
| Authentication Mode | API authentication mode. Select None. |

The authentication mode None is not recommended for actual services because it grants all users access to the API.

- Configure backend information and click Next.

Table 6 Parameters for defining an HTTP/HTTPS backend service

| Parameter | Description |
|---|---|
| URL | Method: Request method of the API. Set this parameter to ANY. |
| Protocol | Set this parameter to HTTP. |
| Load Balance Channel | Select the created channel. |
| Path | Path of the backend service. |

- Define the response and click Finish.

- Debug the API.

On the APIs tab, click Debug, and then click the red Debug button. If the status code 200 is returned in the response, debugging is successful and you can go to the next step. Otherwise, rectify the fault by following the instructions provided in Error Codes.

- Publish the API.

On the APIs tab, click Publish Latest Version, retain the default option RELEASE, and click OK. When the exclamation mark in the upper left of the Publish button disappears, the API is published and you can go to the next step. Otherwise, rectify the error indicated in the error message.

- Call the API.

- Bind independent domain names to the group of this API.

On the group details page, click the Group Information tab. The debugging domain name is intended only for development and testing and can be accessed 1000 times a day. Bind independent domain names to expose the APIs in the group.

Click Bind Independent Domain Name to bind registered public domain names. For details about how to bind a domain name, see Binding a Domain Name.

- Copy the URL of the API.

On the APIs tab, copy the API URL. Open a browser and enter the URL. When the defined success response is displayed, the call is successful.

Figure 1 Copying the URL

Now, the CCE workload is opened by creating a load balance channel.
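The WRR routing algorithm selected in Table 1 distributes requests according to weight. How APIG implements it internally is not described here, so the following is only a generic smooth weighted round-robin sketch with hypothetical backend names and weights, meant to show what weight-proportional distribution looks like.

```python
def smooth_wrr(weights, request_count):
    """Pick a backend for each request using smooth weighted round robin.

    weights maps a backend name to a positive integer weight.
    Returns the list of chosen backend names, one per request.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(request_count):
        # Every backend gains its own weight on each round.
        for name, weight in weights.items():
            current[name] += weight
        # The backend with the highest running score serves the request
        # and is then penalized by the total weight.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks


# Hypothetical backends and weights for illustration only.
picks = smooth_wrr({"backend-a": 2, "backend-b": 1}, 6)
print(picks.count("backend-a"), picks.count("backend-b"))  # 4 2
```

Over any full cycle whose length equals the total weight, each backend is picked as many times as its weight, while the picks are interleaved rather than sent in bursts.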


Method 2: Opening a CCE Workload by Importing It

- Import a CCE workload.

- Go to the APIG console, and choose Gateways in the navigation pane.

- Choose API Management -> API Groups.

- Choose Create API Group -> Import CCE Workload.

- Enter information about the CCE workload to import.

Table 7 Workload information

| Parameter | Description |
|---|---|
| Cluster | Select the created cluster. |
| Namespace | Select a namespace in the cluster. In this example, select default. |
| Workload Type | Select Deployment. This parameter must be the same as the type of the created workload. |
| Service Label Key | Select the pod label app of the created workload. |
| Service Label Value | Select the label value deployment-demo. |
| Tag | Another pod label version=v1 of the workload is automatically selected. |

- Configure API information.

Table 8 API information

| Parameter | Description |
|---|---|
| Request Path | API request path for prefix match. Default: /. In this example, retain the default value. |
| Port | Enter 80. This must be the same as the container port in the workload. |
| Authentication Mode | Default: None. |
| CORS | Disabled by default. |
| Timeout (ms) | Backend timeout. Default: 5000. |

- Click OK. The CCE workload is imported, with an API group, API, and load balance channel generated.

- View the generated API and load balance channel.

- View the generated API.

- Click the API group name, and then view the API name, request method, and publishing status on the APIs tab.

- Click the Backend Configuration tab and view the bound load balance channel.

- View the generated load balance channel.

- Choose API Management -> API Policies.

- On the Load Balance Channels tab, click the channel name to view details.

- Check that this load balance channel is the one bound to the API, and then go to the next step. If it is not, repeat the steps from the beginning.


- Open the API.

Since importing a CCE workload already creates an API group and API, you only need to publish the API in an environment.

- Debug the API.

On the APIs tab, click Debug, and then click the red Debug button. If the status code 200 is returned in the response, debugging is successful and you can go to the next step. Otherwise, rectify the fault by following the instructions provided in Error Codes.

- Publish the API.

On the APIs tab, click Publish Latest Version, retain the default option RELEASE, and click OK. When the exclamation mark in the upper left of the Publish button disappears, the API is published and you can go to the next step.

- Call the API.

- Bind independent domain names to the group of this API.

On the group details page, click the Group Information tab. The debugging domain name is intended only for development and testing and can be accessed 1000 times a day. Bind independent domain names to expose the APIs in the group.

Click Bind Independent Domain Name to bind registered public domain names. For details about how to bind a domain name, see Binding a Domain Name.

- Copy the URL of the API.

On the APIs tab, copy the API URL. Open a browser and enter the URL. When the defined success response is displayed, the call is successful.

Figure 2 Copying the URL

Now, the CCE workload has been opened by importing it.
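The imported API uses its Request Path for prefix match, with / as the default. The sketch below shows one plausible reading of prefix matching, in which a prefix must end on a path-segment boundary. That boundary rule and the example path /whoami are assumptions made for illustration, so check the APIG documentation for the exact matching rules.

```python
def prefix_match(request_path, api_path="/"):
    """Return True if request_path is covered by the API request path prefix.

    Assumes the prefix must end on a path-segment boundary, so /whoami
    covers /whoami and /whoami/status but not /whoami2.
    """
    prefix = api_path.rstrip("/")
    return request_path == prefix or request_path.startswith(prefix + "/")


# The default request path / covers every request path.
print(prefix_match("/", "/"))
print(prefix_match("/api/v1/items", "/"))

# A hypothetical, narrower request path covers only its own subtree.
print(prefix_match("/whoami/status", "/whoami"))
print(prefix_match("/whoami2", "/whoami"))
```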


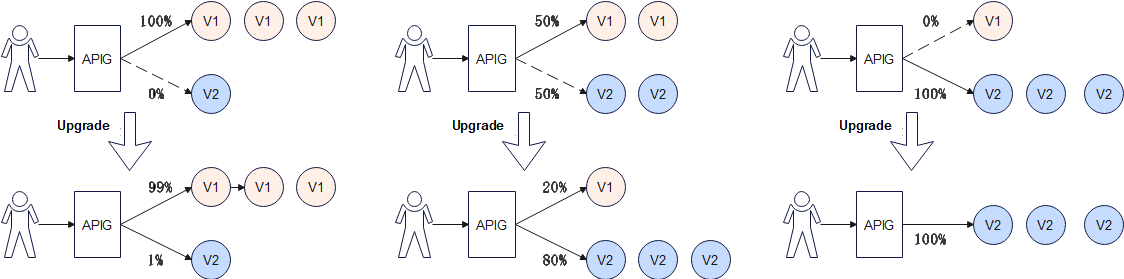

(Optional) Configuring Workload Labels for Grayscale Release

Grayscale release is a service release policy that gradually switches traffic from an early version to a later version by specifying the traffic distribution weight. Services are verified during release and upgrade. If the later version meets expectations, you can increase its traffic percentage and decrease that of the early version. Repeat this process until the later version receives 100% of the traffic and the early version receives none. The traffic is then fully switched to the later version.

Figure 3 Grayscale release principle

CCE workloads are configured using the pod label selector for grayscale release. You can quickly roll out and verify new features, and switch servers for traffic processing. For details, see Using Services to Implement Simple Grayscale Release and Blue-Green Deployment.
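The gradual shift described above comes down to simple arithmetic: each server group receives its weight divided by the total weight. The sketch below computes the resulting traffic shares for a sequence of weight settings. The group names and the first and last weight pairs follow this example; the intermediate 50/50 step is hypothetical.

```python
def traffic_shares(weights):
    """Return each server group's share of traffic as weight / total weight."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one server group needs a non-zero weight")
    return {group: weight / total for group, weight in weights.items()}


# Weight settings over the course of a grayscale release.
schedule = [
    {"server_group_v1": 100, "server_group_v2": 1},   # initial canary
    {"server_group_v1": 50, "server_group_v2": 50},   # hypothetical midpoint
    {"server_group_v1": 0, "server_group_v2": 100},   # fully switched
]

for weights in schedule:
    shares = traffic_shares(weights)
    print(", ".join(f"{group}: {share:.1%}" for group, share in shares.items()))
```

With weights of 100 and 1, server_group_v1 receives 100 of every 101 requests, which is about 99%.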

The following describes how to smoothly switch traffic from V1 to V2 through grayscale release.

- Create a workload and set a pod label with the same value as the app label of the preceding workload.

On the workload creation page, go to the Advanced Settings -> Labels and Annotations area, and set app=deployment-demo and version=v2. If you create a workload by importing a YAML file, add the pod labels in this file.

- For the server group with pod label version=v1, adjust the traffic weight.

- On the APIG console, choose Gateways in the navigation pane.

- Choose API Management -> API Policies.

- On the Load Balance Channels tab, click the name of the created channel.

- In the Backend Server Address area, click Modify.

- Change the weight to 100, and click OK.

The weight determines the proportion of traffic forwarded to a server group. Because server_group_v1 is currently the only server group, all traffic is forwarded to the pod IP addresses in server_group_v1.

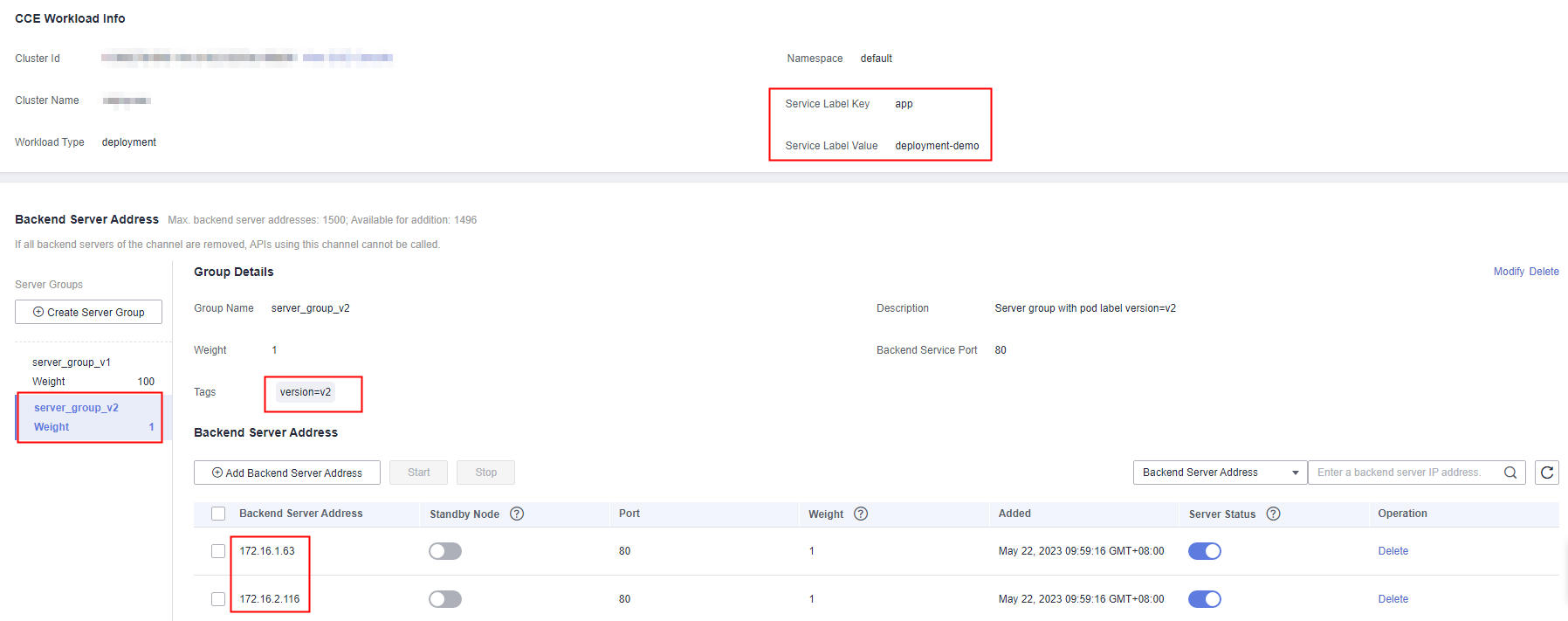

- Create a server group with pod label version=v2, and then set the traffic weight.

- In the Backend Server Address area, click Create Server Group.

Table 9 Server group configuration

| Parameter | Description |
|---|---|
| Server Group Name | Enter server_group_v2. |
| Weight | Enter 1. |
| Backend Service Port | Enter 80. |
| Tag | Select pod label version=v2. |

- Click OK.

- Refresh the backend server addresses.

Refresh the page to update the backend server addresses. The load balance channel automatically monitors the pod IP addresses of the workload and dynamically adds them as backend server addresses. As shown in the following figure, the tags app=deployment-demo and version=v2 automatically match the pod IP addresses (backend server addresses) of the workload.

Figure 4 Pod IP addresses automatically matched

Traffic is split by server group weight over total weight: 100 of 101 requests (about 99%) are distributed to server_group_v1, and the remaining 1 of 101 to the later version in server_group_v2.

Figure 5 Click Modify in the upper right of the page.

- Check that the new features released to V2 through grayscale release are running stably.

If the new version meets expectations, go to the next step. Otherwise, the new feature release fails.

- Adjust the weights of server groups for different versions.

Gradually decrease the weight of server_group_v1 and increase that of server_group_v2. Repeat the stability check and the weight adjustment until the weight of server_group_v1 becomes 0 and that of server_group_v2 reaches 100.

As shown in the preceding figure, all requests are forwarded to server_group_v2. New features are switched from the version=v1 workload to the version=v2 workload through grayscale release. (You can adjust the traffic weight to meet service requirements.)

- Delete the backend server group server_group_v1 of version=v1.

Now that all traffic has been switched to the backend server group of version=v2, you can delete the server group of version=v1.

- Go to the load balance channel details page on the APIG console, and delete all IP addresses of the server group of version=v1 in the Backend Server Address area.

- Click Delete on the right of this area to delete the server group of version=v1.

The backend server group server_group_v2 of version=v2 is kept.